Biography

Jinlai Xu is a Researcher/Engineer at the Infrastructure System Lab of ByteDance US. He currently works on technologies aimed at evolving infrastructure to its next generation, including but not limited to next-generation ML/big data infrastructure, graph learning/computing, cloud-native and serverless infrastructure, and hyper-scale heterogeneous cluster management.

He received his PhD from the University of Pittsburgh in 2021. His research interests include frameworks for big model training, inference and fine-tuning, serverless computing, distributed systems, fog/edge and cloud computing, stream processing optimization, and blockchain-based techniques. His PhD advisor is Balaji Palanisamy. He received his bachelor's and master's degrees from China University of Geosciences, where his advisor was Zhongwen Luo.

- Big Model

- Serverless Computing

- Fog/Edge Computing

- Cloud Computing

- Distributed Systems

- Stream Processing Optimization

- Reinforcement Learning

- Blockchain

PhD in Information Science, 2021

University of Pittsburgh

M.Phil in Software Engineering, 2015

China University of Geosciences

BEng in Software Engineering, 2012

China University of Geosciences

Research Topics

Big Model Training/Inference/Fine-tuning Frameworks

Ray Project

Low-latency Stream Processing/Resilience/Elasticity in Edge Computing

Resource Allocation/Management/Sharing

RL on Systems

Incentive Design for Edge Resource Sharing

Featured Publications

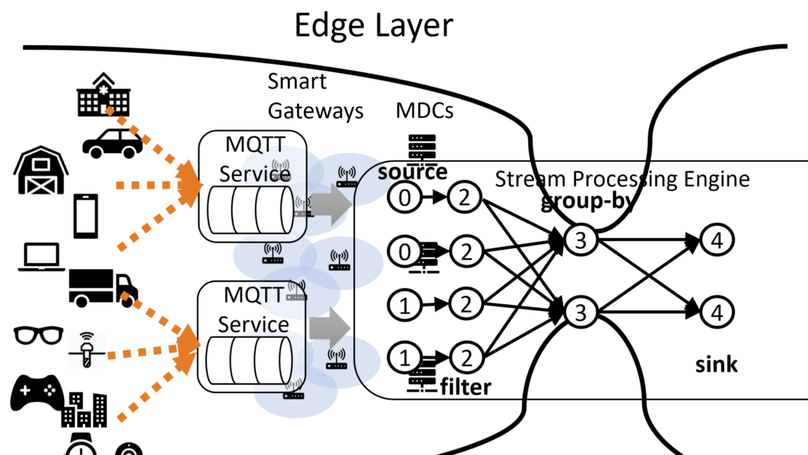

The proliferation of Internet-of-Things (IoT) devices is rapidly increasing the demand for efficient processing of low-latency stream data generated close to the edge of the network. Edge computing-based stream processing techniques that carefully consider the heterogeneity of the computational and network resources available in the infrastructure provide significant benefits in optimizing the throughput and end-to-end latency of the data streams. In this paper, we propose a novel stream query processing framework called Amnis that optimizes the performance of stream processing applications through a careful allocation of the computational and network resources available at the edge. The Amnis approach differentiates itself through its consideration of data locality and resource constraints during physical plan generation and operator placement for the stream queries. Additionally, Amnis considers coflow dependencies to optimize network resource allocation through an application-level rate control mechanism. We implement a prototype of Amnis in Apache Storm. Our performance evaluation, carried out on a real testbed, shows that the proposed techniques achieve as much as a 200X improvement in end-to-end latency and a 10X improvement in overall throughput compared to the default resource-aware scheduler in Storm.

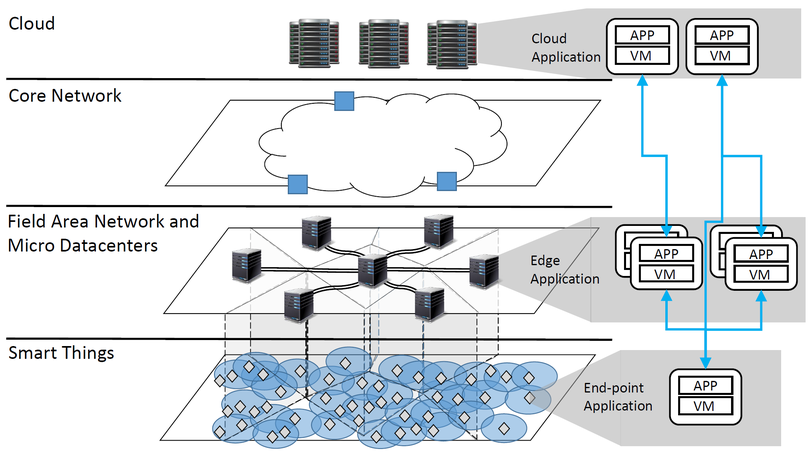

In the Internet of Things (IoT) era, the demand for low-latency computing for time-sensitive applications (e.g., location-based augmented reality games, real-time smart grid management, real-time navigation using wearables) has been growing rapidly. Edge computing provides an additional layer of infrastructure to fill latency gaps between IoT devices and the back-end computing infrastructure. In the edge computing model, small-scale micro-datacenters, which represent ad hoc and distributed collections of computing infrastructure, pose new challenges in terms of management and effective resource sharing to achieve a globally efficient resource allocation. In this paper, we propose Zenith, a novel model for allocating computing resources in an edge computing platform that allows service providers to establish resource sharing contracts with edge infrastructure providers a priori. Based on the established contracts, service providers employ a latency-aware scheduling and resource provisioning algorithm that enables tasks to complete within their latency requirements. The proposed techniques are evaluated through extensive experiments that demonstrate the effectiveness, scalability and performance efficiency of the proposed model.

Teaching Experience

Teaching Assistant, September 2015 - Present

- University of Pittsburgh
  - Cloud Computing (2017 Spring, 2018 Spring, 2019 Spring)
    - Instructor: Prof. Balaji Palanisamy
  - Information Security & Privacy (2017 Fall)
    - Instructor: Prof. Balaji Palanisamy
  - Information Security & Privacy (Online Course) (2018 Fall)
  - Algorithm Design (2018 Fall)
    - Instructor: Prof. Hassan Karimi

Teaching Assistant, September 2013 - January 2014

- China University of Geosciences
  - Advanced Programming Language (Java)
    - Instructor: Prof. Shengwen Li

Professional Services

- Journal Review
  - IEEE Transactions on Services Computing (TSC)
  - Concurrency and Computation: Practice and Experience (CCPE)
  - International Journal of Cooperative Information Systems (IJCIS)
  - Information Systems Frontiers (ISFI): IRI Special Issue on Foundations of Reuse
  - PLOS ONE
  - TELKOMNIKA (Telecommunication, Computing, Electronics and Control)
- Conference Review
  - International Workshop on Internet-scale Clouds and Big Data (ISCBD)
  - IEEE International Conference on Communications (ICC)
- Conference External Review
  - International World Wide Web Conference (WWW)
  - International Conference on Distributed Computing Systems (ICDCS)
  - ACM International Conference on Information and Knowledge Management (CIKM)
- Conference Volunteer
  - IEEE 18th International Conference on Information Reuse and Integration (IRI 2017), San Diego, CA, USA. Aug 4 - 6, 2017
  - The 37th International Conference on Distributed Computing Systems (ICDCS 2017), Atlanta, GA, USA. June 5 - 8, 2017
  - IEEE 17th International Conference on Information Reuse and Integration (IRI 2016), Pittsburgh, PA, USA. Jul 28 - 30, 2016
  - IEEE 2nd International Conference on Collaboration and Internet Computing (CIC 2016), Pittsburgh, PA, USA. Nov 1 - 3, 2016
- Conference Webmaster
  - 7th IEEE International Conference on Collaboration and Internet Computing (CIC 2021)
  - Third IEEE International Conference on Trust, Privacy and Security in Intelligent Systems and Applications (TPS 2021)
  - Third IEEE International Conference on Cognitive Machine Intelligence (CogMI 2021)
  - International Workshop on Internet-scale Clouds and Big Data (ISCBD 2017)
  - IEEE 18th International Conference on Information Reuse and Integration (IEEE IRI 2017)
  - International Workshop on Internet-scale Clouds and Big Data (ISCBD 2016)

Research Experience

- Working on technologies aimed at evolving infrastructure to its next generation, including but not limited to next-generation ML/big data infrastructure, graph learning/computing, cloud-native and serverless infrastructure, and hyper-scale heterogeneous cluster management.

- Focus on projects related to serverless computing (the Ray project), graph computing, and frameworks for big model training, inference and fine-tuning.

- Reviewed the related literature (mainly in distributed systems, cloud computing, edge computing, stream processing, reinforcement learning and blockchain-based techniques).

- Focused on resource management problems in edge and cloud computing to achieve low-latency stream processing.

- Published papers on these topics.

- Reviewed the related literature (mainly in cloud computing).

- Built the cloud computing platform for our faculty:

- Designed the Xen-based virtualization solution for the cluster.

- Deployed Hadoop and related applications (Hive, Spark, Solr, …) on the cluster.

- Supported deep learning experiments in our lab.

- Studied the MapReduce programming model and conducted research on it:

- Read the MapReduce source code in the Hadoop project.

- Proposed a new method for reusing intermediate results automatically and in a data-aware manner, and implemented a prototype system by modifying the core code of MapReduce.

- Evaluated the system on the cluster; it improved performance by up to 24.6% compared with previous optimization work.

- The paper was published in CCPE (title: MEMoMR: Accelerate MapReduce via reuse of intermediate results).

- Managed our faculty's cluster:

- Allocated virtual machines and network resources.

- Supported a mirror site on the cluster (http://mirrors.cug.edu.cn).

- Reviewed the related literature (mainly in computer vision and robotics).

- Participated in the 9th Robot Soccer Tournament of China and the FIRA tryouts in Changchun in freshman year.

- Studied the architecture and implementation of ROS (Robot Operating System) and deployed a preliminary version on the robot's control board (RoBoard RB100).

- Successfully applied for funding from the National College Students Innovation Experiment Program:

- Topic: Small Model Aircraft Autopilot System and Aerial Photo Research

- Chose a quadrotor (an aircraft with four rotors) as the research platform.

- Studied the theory of balancing a quadrotor using MikroKopter (one of the best-known open-source UAV projects).

- Studied and implemented point cloud registration using the ICP and RANSAC algorithms on ROS.

- Used an ASUS Xtion PRO (a Kinect-like depth camera) to capture point cloud data and evaluate the algorithms.

- Wrote the graduation thesis on this topic (title: The Design and Implementation of the Quadrotor Autopilot and 3-D Point Cloud Generation and Processing System).